What is a Data Quality API?

A data quality API is a software intermediary that handles requests and responses for data quality functions. It goes by several names, including data quality firewall, real-time data quality validation, and central data quality engine. The API is triggered when an event takes place: incoming data is routed through it for quality verification before being passed on to the destination database. This ensures that no data quality errors migrate from the data-capturing application to the data store. Because the API is built on an event-driven architecture, it suits proactive data quality approaches, where data is tested and treated before it is stored in the database.

Reference: David Loshin, The Practitioner’s Guide to Data Quality Improvement, 2011

Why is data quality important?

Gartner research estimates that organizations of all sizes lose about $13M annually to poor data quality, and an average of 60% of businesses surveyed do not measure the cost of bad data. Tools like data quality APIs can help. An API acts as an intermediary between two applications and handles the requests and responses transmitted between them. In the context of data quality, a data quality API acts as a gatekeeper between a data-capturing application and a data store, ensuring that data quality errors are not migrated from one end to the other.

The DataGovs API is built on an event-driven architecture, which means it is triggered when an event occurs. For example, when new data is created or existing data is updated in a connected application, the update is first sent to the data quality API, where it is verified for quality. If errors are found, a set of transformation rules is executed to clean the data. In some cases, a data quality steward may need to intervene to resolve issues where data values are ambiguous and cannot be processed reliably by the configured algorithms. Once the data has been cleaned and verified, it is sent to the destination.
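To make this flow concrete, here is a minimal Python sketch of an event handler that validates a record, applies transformation rules when validation fails, and either stores the record or queues it for a data quality steward. It is an illustration only, not the DataGovs API: the names `handle_event`, `validate`, `clean_email`, and `QualityResult`, the `name` and `email` fields, and the email pattern are all assumptions made for this example.

```python
import re
from dataclasses import dataclass, field

Record = dict[str, str]

# Hypothetical, deliberately simple email pattern (lowercase only).
EMAIL_PATTERN = re.compile(r"[a-z0-9._%+-]+@[a-z0-9.-]+\.[a-z]{2,}")


@dataclass
class QualityResult:
    record: Record
    status: str  # "stored" or "needs_review"
    issues: list[str] = field(default_factory=list)


def validate(record: Record) -> list[str]:
    """Return a list of quality issues; an empty list means the record passes."""
    issues = []
    if not record.get("name", "").strip():
        issues.append("name: missing")
    if not EMAIL_PATTERN.fullmatch(record.get("email", "")):
        issues.append("email: invalid format")
    return issues


def clean_email(record: Record) -> Record:
    """Transformation rule: trim whitespace and lowercase the email."""
    cleaned = dict(record)
    cleaned["email"] = cleaned.get("email", "").strip().lower()
    return cleaned


# Assumed rule set; a real deployment would configure many more rules.
TRANSFORMATION_RULES = [clean_email]


def handle_event(record: Record, destination: list, review_queue: list) -> QualityResult:
    """Triggered on a create/update event, before the record reaches storage."""
    issues = validate(record)
    if issues:
        # Errors found: run the transformation rules, then verify again.
        for rule in TRANSFORMATION_RULES:
            record = rule(record)
        issues = validate(record)
    if issues:
        # Still ambiguous after cleaning: hand off to a data quality steward.
        review_queue.append(record)
        return QualityResult(record, "needs_review", issues)
    destination.append(record)
    return QualityResult(record, "stored")
```

In this sketch, a record such as `{"name": "Ada", "email": "  Ada@Example.COM "}` fails the first check, is cleaned by the rule, and is then stored, while a record whose email cannot be repaired ends up in `review_queue` instead of the destination. Plain lists stand in for the database and the steward's queue to keep the example self-contained.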

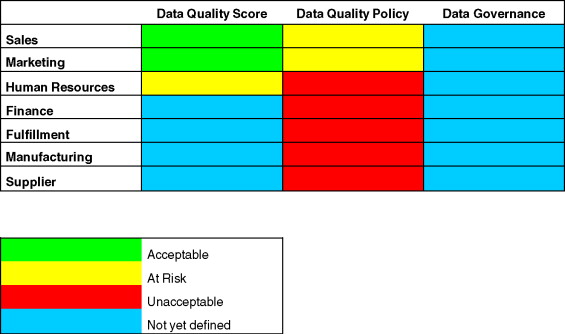

Example of a Data Quality Scorecard used at the API and JSON Level